Am I an Anachronism?

What does a picture of a (very nice) group of people have to do with my blog this week? It was taken at a songwriting retreat, where the main goal of the three days was to write a song collaboratively and then perform it for the group. I collaborated with Pete, on the far right. Our topic was 'Years', and we wrote a song called 'Time's not on my side any more'.

The genesis of both our song and this blog was a conversation about AI with a friend during the week (before we went on an extremely hard bike ride in the back country behind Gibbston). One morning, as she walked past me on her way to breakfast, I was working on a funding proposal. “What are you doing?” she asked.

“Trying to get a section of a proposal down to 1120 words from the 2500 words the researcher gave me.”

“Why don’t you get ChatGPT to do that for you?”

The short answer was that I didn't think ChatGPT could do that sort of editing well. ChatGPT can reduce the number of words, but it struggles to deliver a text that is both shorter and still includes the detail a research funding proposal needs. In general, I don't think large language models can yet do the work I do, although I could be wrong. My short answer didn't satisfy my friend, so I had to think hard about the reasons why I was doing the editing without AI assistance.

1) I understand the research proposals I work on by interacting with them. I rarely just read a proposal; I start working on the text at the same time I start reading it. In the process of rewriting, I find out whether I actually understand the concepts in the document, because restructuring text requires understanding what you are reading and editing. In my experience, a piece of text cannot be improved at any deep level simply by adding comment boxes. Improvement requires being deeply embedded in what you are reading, which requires interaction, i.e. either rewriting it or summarising it separately in your own words. Anyone who has studied for exams will remember that the best way to really know your material is to rewrite and summarise it, not just read it.

So, if I got ChatGPT to reduce my 2500-word text to 1120 words, I wouldn't necessarily know whether the reduced version carried the same meaning as the original, because I wouldn't know what the original text actually meant. I certainly wouldn't know whether all the information had been conveyed or whether it was structured in the best way. The only way I'd know that is if I reduced the text myself and then compared the ChatGPT version with mine, at which point why would I use ChatGPT, since I would already have done the work?

2) Creating a good summary requires understanding the material at a deeper level than the summary itself; it is very difficult to summarise or teach at the most complex level you understand. Normally you summarise or teach at least 'one level up'. So if I hand the summarising over to ChatGPT, my deepest knowledge of the material will only be at the summary level.

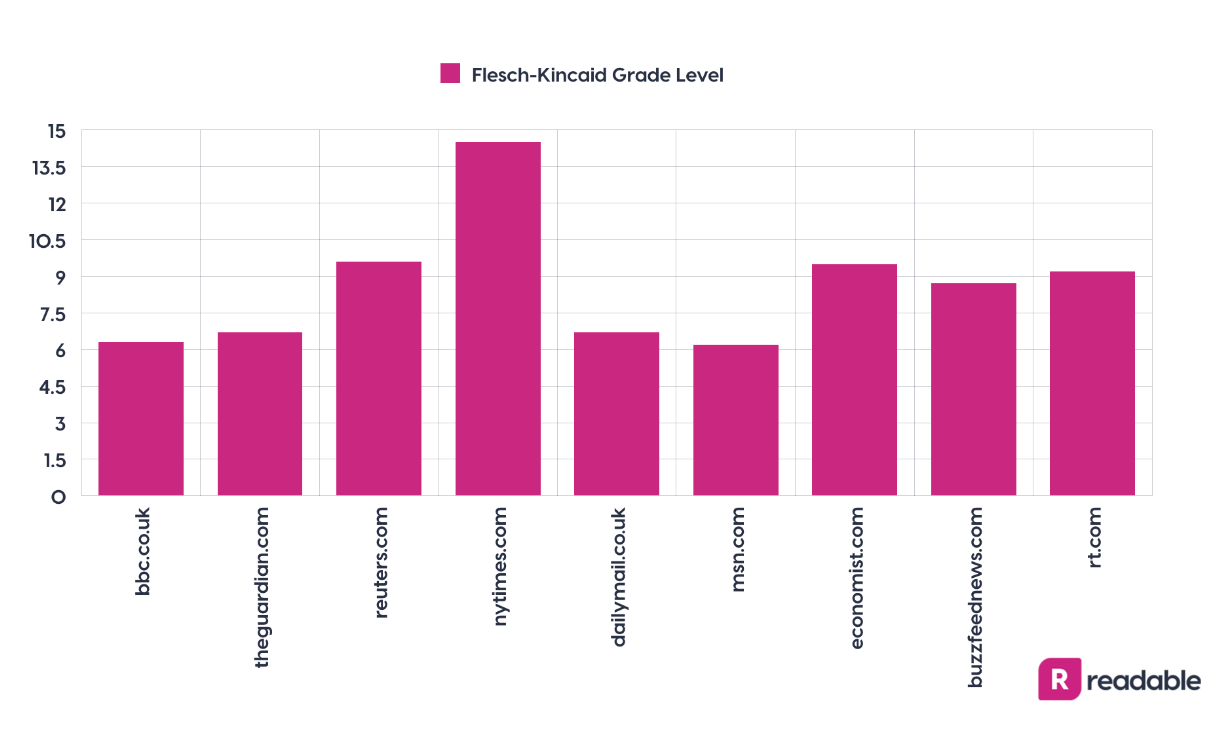

I found this conclusion frightening. Powered vehicles have meant that we are gradually losing our physical ability to move ourselves from place to place. Now people seek 'artificial' ways to maintain or regain physical ability, e.g. by going to the gym (often driving there!). In the same way, AI may mean we give away our mental ability to understand information at a deep level in the course of our work. Will we then have to find artificial ways of exercising our brains because we have got AI to do the heavy lifting? Will we be reading complex pieces of text and answering questionnaires on them as a voluntary exercise so we don't lose our mental capacities? Or will we not even notice we are losing that ability, in the same way that the reading age targeted by the media has gradually fallen over time without anyone apparently minding?

In the 1940s, newspaper articles were aimed at college graduates. Flesch and Gunning, both readability experts, persuaded the papers to 'increase their readability' to improve circulation. After their work, the majority of large newspapers could be understood by early high school students. Today, however, the goal is text readable by children in late primary or intermediate school. If you want more challenging reading you need to go to the New York Times – see the picture below of the reading age of different news outlets.

My friend argued for using ChatGPT on the basis that we are handing the drudgery to AI, leaving humans free to do the more interesting work. We don't use slide rules or books of log tables any more. This is true, and I wouldn't want to give up Excel and go back to drawing graphs by hand (as I did in my first geology degree). It was time-consuming and hugely limited the amount of data I could consider. We trust the tools we are accustomed to using.

My friend has written a lobbying report to government for the organics sector, and she is sure that, because she used AI to find and compile the information, the report included far more of it than if she had done everything herself. She agreed that the first version of the report, produced by using Perplexity (an internet search tool combined with a large language model) to source information and ChatGPT4 (a large language model) to compile it, needed further work. However, this process allowed her to rapidly acquire a starting point that she could then improve.

I saw my friend's point about AI compiling far more information than she had time to gather and assess. However:

- If she wasn't assessing the information, how could she be sure of its validity? Perplexity does provide references, but when I followed them up I found that many were themselves summaries, and all were limited to what is freely available on the internet. It is very hard to know how valid, or uncertain, the information is when so little of it is original source material (the scientist coming out in me).

- Neither model continuously incorporates new information; they are trained on data up to a certain point in time (ChatGPT3.5 up to 2022).

- Neither model checks the accuracy of its outputs. All AI models can 'hallucinate', i.e. state something authoritatively that is plain wrong.

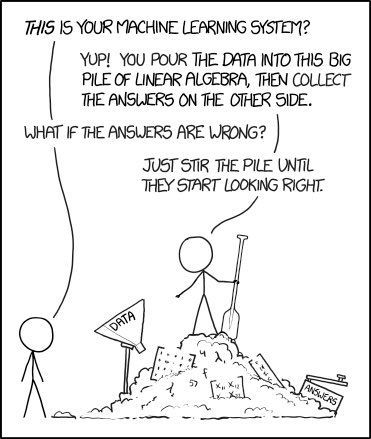

- Most importantly, neither tool analyses data; they just predict the next most likely word in a string of words. That is hard to believe given the complexity of the outputs, but it is still true.

My friend assured me that all things are possible: finding original information, checking uncertainties, using plug-ins to ChatGPT. Clearly I need to use ChatGPT or other large language models (LLMs) more so I can understand what degree of verification is possible. I am still worried, however, that LLMs can rapidly produce vast amounts of information that will swamp human brains (it's bad enough with humans producing information), leaving us no chance of independently verifying what we read, because there simply won't be time and there will rarely be the inclination.

In the vein of 'I need to know more', after I had reduced the proposal I was working on to the required length, I had a go at seeing what ChatGPT could do. It reduced the text to a summary without any detail. This is no use for a funding application: assessors need detail, not just generalities, and the trick is figuring out which details are the most important to provide. I tried again, multiple times, asking ChatGPT to structure the text in different ways so that it would include detail. However, I couldn't get ChatGPT to deliver a document that I thought was appropriate for a research funding proposal, or even particularly interesting. ChatGPT delivered a banal summary.

The failure of my test could be because:

- I wasn't instructing ChatGPT appropriately. However, it seemed that I would need to know so much about the text to instruct ChatGPT properly that I might as well write it myself (confirming my original thoughts, which might mean I am right, or might mean I am biased!).

- I was using ChatGPT3.5 because I don’t yet want to pay for the improved ChatGPT4.

- Large language models can't yet create anything much better than a summary.

In the end, I'm left feeling uneasy. It feels as though we are going to give something away, and we won't know what we are losing until it is already gone: something as intrinsic to being a properly functioning human being as moving one's body. But maybe I'm just a 19th-century Luddite railing against the new machines. I hope so.