First winter snow on Ben Cruachan

This should be a time to celebrate – on the 5th of May the World Health Organisation declared the COVID-19 pandemic is no longer a global emergency (it was announced as a global emergency on 30 January 2020). Polio is the only infectious disease currently considered a global emergency by the WHO. Designation of a global emergency triggers a series of rules in member states that guide response to threatening disease outbreaks, including fast-tracking of tests and medicines. These rules can now be unwound.

However, for the average person, the significance of the WHO declaration feels as remote as the top of Ben Cruachan looked this morning from just outside our door. COVID-19 is sliding to the back of our brains (unless we are sick with it … still in the ‘not yet’ category for our household) and the next crisis shifts to the forefront.

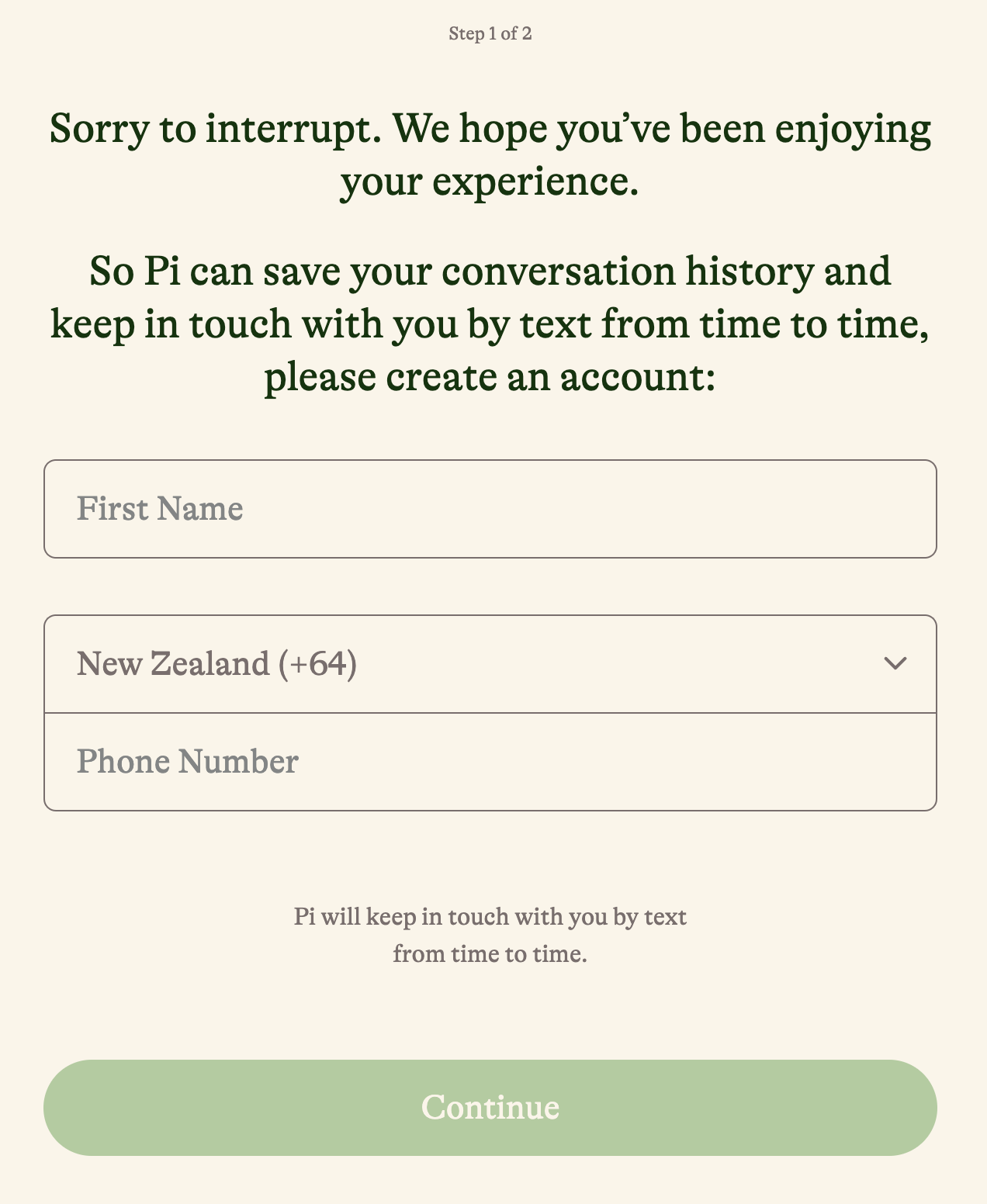

I was alerted to yet another form of AI chatbot this week by a relative who posted their early morning chat with ‘Pi’ (‘Personal intelligence’).

Pi was released last Tuesday by Inflection AI, who introduced the chatbot as the first AI step towards “making you happier, healthier and more productive”. Pi is “a supportive and compassionate AI that is eager to talk about anything at any time.” Inflection AI isn’t telling us which large language model Pi is based on. Nor are they telling us what data Pi has been trained on. As far as the ethics of its creation go, you have to trust the creators of Pi. Hmmmm.

Pi has, supposedly, been created in response to the harm being done by social media, whose goal is to keep you engaged as long as possible in order to show you as many ads as possible, because that’s the revenue model of social media. So Pi is going to keep you engaged, just with the intent of making you happier etc.? I find this very hard to believe. Pi’s founders, who have recently raised USD225 million to expand their company, are providing Pi out of the goodness of their (and their investors’) hearts. I don’t think so!

Currently, you can trial Pi for free. Inflection AI won’t say at what point it might charge for a subscription. However, the first thing Pi does, after a few lines of conversation, is ask you to sign up with your phone number so it can send you text messages.

I found myself reluctant to hand over my phone number. Goodness knows why, I’ve given it away enough times. But it felt like the thin end of the wedge – this AI is going to do good things for me as long as I give it this very tiny offering … However, in the end I capitulated because I wanted to ask Pi questions in order to write this blog.

I tried asking Pi about the WHO declaration on COVID, because that’s what’s on my mind (see the conversation below). Unfortunately, Pi didn’t have much to say.

I wonder why Pi can’t tell you the specific limits of its information – why shouldn’t it be allowed to check the latest dates of its training material? This ties up with the lack of transparency I referred to above. I was, however, heartened by Pi’s relatively brief statements, compared with ChatGPT. ChatGPT feels like it has been trained to write in the tiresome fashion of many of the poorer writers with whom I work – where the writer thinks that verbosity will hide the lack of insight in their words.

Pi is designed to offer human-like support and advice, but also to make clear that it’s not actually human. To that end, Pi reflects back to the writer what they have said, a service which humans often fail to do for each other – see the conversation below (my relative’s conversation, not mine).

It’s so easy as a human to fall into the ‘solve problem’ trap, coming up with solutions rather than letting a person know they’ve been heard. An AI can be programmed to refrain from providing solutions, or to provide them only if specifically asked. So, does it matter if AIs start to provide ‘empathetic’ support, rather than humans? Especially if humans aren’t good at offering support?

I used the bleak photograph above because I fear that lack of human interaction matters, a lot. For a start, how will humans improve at offering support to each other if we stop being trained to do so, through our interactions with others and the consequent feedback loops? There’s only so much time in the day, right? If we are spending time on media or with AI, we aren’t spending time interacting with other humans. We are going to get worse at human interactions and, as a result, likely spend less time interacting with other humans. Okay, does it matter if humans spend less time interacting with other humans?

One definition of society is

an enduring and cooperating social group whose members have developed organised patterns of relationships through interactions with one another.

To have relationships with other people requires spending time and energy relating to people. Now we are going to create human-like interactions that are not with humans. We are going to create technology humans will interact with on the basis that the technology is better than humans.

And, although Pi is programmed to tell users it’s not a human, this type of capability will be used to create AIs that will trick people into thinking they are humans. Shortly, it will be impossible to know on the internet whether you are interacting with a human or a model. Thinking some more, delete ‘shortly’.

Further, AI could be up front about not being human and still win us over, because people become attached to inanimate objects or computer models very easily. If something results in humans having emotions, the humans will become attached to that something. I came across an example from the University of Auckland where people in retirement villages became very attached to robotic toy fur seals with which they interacted. There was no question of their thinking the seals were real.

To me, the crucial matter in regard to our interactions with AIs is that they are not about building a human society. We are already creating the picture of our future – it’s going to be about the network of interactions between humans and AIs, with human-human interactions likely forming an ever-decreasing subset of that network. Do you think this matters?

What do you call a cow with no legs?

Ground beef

What do you call a cow with no tail?

A dairy air

What do you call a fish with no eyes?

A fsh

When I asked Pi to tell me some jokes it thought I would like, the above is what I got … I don’t think it knows me very well yet. I also don’t think Pi yet understands the difference between the spoken word and the written word in jokes. I’m sure it will soon.
