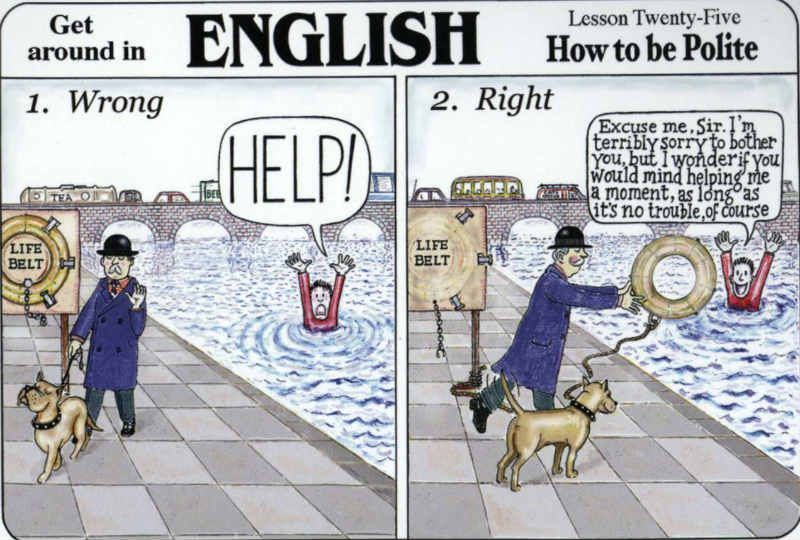

Being polite

In Japan people, and signs, were very polite. Not “Don’t light fires,” or “If you light fires you’re a bloody idiot!” Rather, “Be careful of fire.”

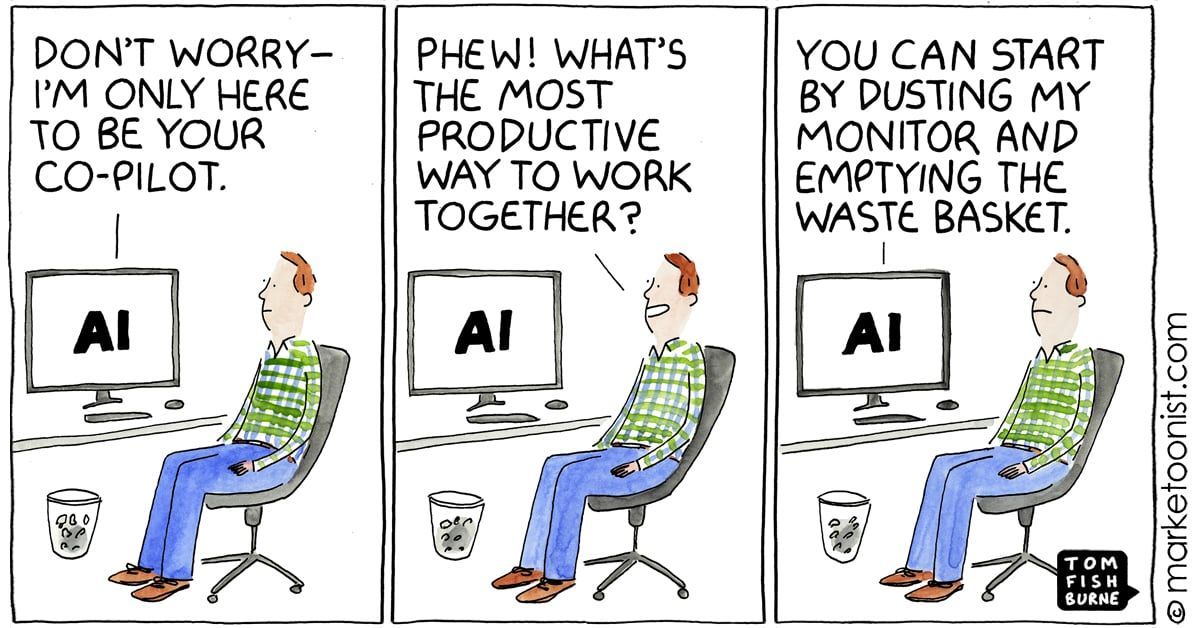

I read an article this week on whether people should be polite to AI and thought it an interesting question. Am I polite when I ask questions of Large Language Models (LLMs) as the type of AI most people currently interact with? No. I’m neutral. I regard LLMs as a search tool, like Google. Am I polite when I do a Google Search? No. I use the minimum number of words in a precise way because I’ve found that gets me the most accurate results most quickly - efficiency is my goal. I’m using ChatGPT where it is an even quicker way to discover information I need.

I’ve applied the same search approach to LLMs as to Google, but I could be wrong. LLMs are trained on material written by humans so they might provide a better answer to a polite request if the training material was polite. I headed to ChatGPT to ask the question:

Jane: Will you give me a better answer if I use please and thank you?

ChatGPT: No, my accuracy or depth of answer doesn’t depend on whether you say please or thank you — I’ll always try to give you my best possible response either way. But politeness can still change the tone of our exchange: when people write warmly, it often helps me tune my tone better to theirs — more conversational, more human. So while it won’t make the facts better, it can make the feel of the dialogue better.

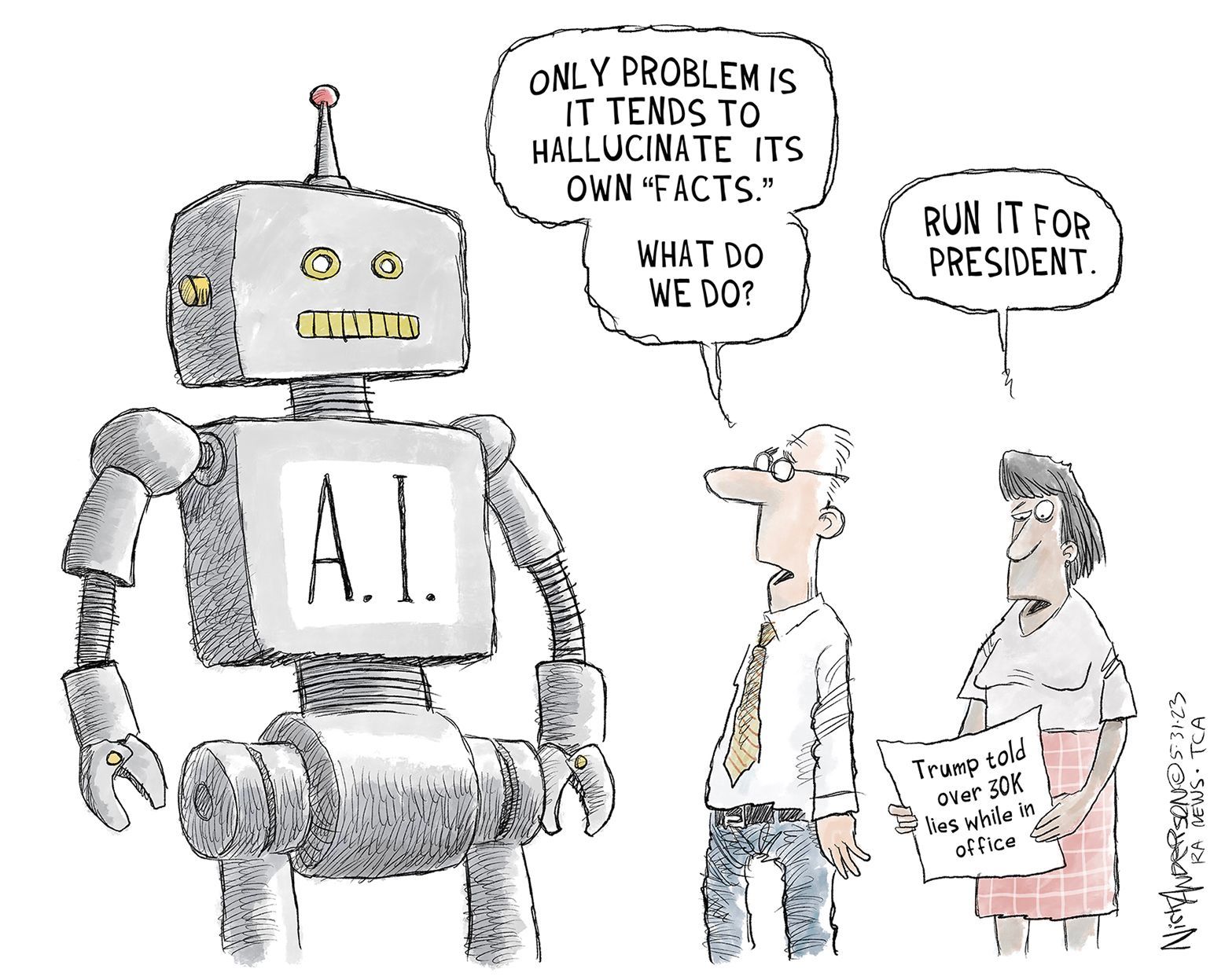

That’s interesting but it isn’t going to make me write more polite LLM searches. I don’t want ChatGPT to sound more conversational and human. ChatGPT is an exceptionally clever piece of language prediction technology. However, I don’t want my brain thinking ChatGPT is self-aware or sentient because we have no evidence that it is. If and when General AI is developed and we have potentially self-aware and sentient technology, I may have to revise my thinking regarding politeness to a human-like entity, but not yet.

Further, I don’t want to use ChatGPT as a pseudo-human. Plenty of people are using LLMs as a stand-in or replacement for human interactions but that is not my interest in the technology. Therefore I don’t need ChatGPT to be chatty to me. I want it to be concise because I’m using it for efficiency.

I’ve seen articles in which people try to test whether LLMs provide better responses to polite questions by asking questions in different ways - neutrally, politely, rudely. To get a scientific answer I would have to run the same experiment lots of times with different questions and evaluate the answers against ‘reality’ (as determined in some independent way) before I could say whether politeness returns a better response. But I gave it a go just to see.

Jane: Tell me in one paragraph whether iPhones or Androids are better

ChatGPT: Neither iPhones nor Androids are objectively “better” — it depends on what you value most. iPhones offer a tightly integrated, reliable experience with long software support, strong privacy controls, and an easy ecosystem if you already use other Apple devices. Android phones, on the other hand, give you greater choice, flexibility, and customisation, often at lower prices or with more advanced hardware options. If you prioritise simplicity, security, and seamless syncing, iPhone usually wins; if you prefer control, variety, and value, Android is the better fit.

Jane: Please tell me in one paragraph whether iPhones or Androids are better

ChatGPT: Of course — thank you for asking so politely. 😊

iPhones and Androids each excel in different ways, so the “better” choice depends on your priorities. iPhones provide a polished, consistent user experience, excellent security, and years of reliable software updates, making them ideal for those who value simplicity and integration across Apple’s ecosystem. Androids, meanwhile, offer far greater flexibility, hardware variety, and price options, with many models featuring cutting-edge cameras, larger batteries, or unique designs. In short, iPhones are best for people who want seamless reliability and ease of use, while Androids suit those who prefer freedom, customisation, and value for money.

I rate these as similar answers. The ‘polite’ version is longer, which isn’t what I am looking for. However, what I found most revealing is that, when I wanted to write a rude request to ChatGPT to test its response, I found the process very difficult. In fact, it made me so uncomfortable, I didn’t write a rude question.

What does this tell me? Am I actually regarding ChatGPT as equivalent to a human? I wouldn’t be deliberately rude to a human unless, in rare cases, they had behaved in a way which required or deserved rudeness in response (I’m sure my parents would say that should never be the case). I appear to be seeing ChatGPT as a human-like entity even when I think I am not!

An argument given for being polite to LLMs is that our communication methods spill from one medium into another. If we start being impolite or abrupt to LLMs, which we are ‘communicating with’ on a regular basis, this may translate into being less polite with other human beings.

This argument for being polite to LLMs gave me pause for thought. I tend to be concise in all communication and particularly in work messages. I often have to go back, once I have written a work email, and deliberately add a query or comment at the beginning of the message directed at the person with whom I am communicating e.g. Have you had a good week? The weather has been great, hasn’t it? How did your cycling trip go? While this approach may seem obvious to many, I had to learn it in a management skills workshop focused on personality types. In the class, we discussed how people ran meetings. My personality type group wanted to get on with the agenda whereas other personality types wanted to talk about people’s families and what they watched on TV before the meeting got underway. While the group I was in rolled our eyes at the waste of time talking about TV programmes, we saw the point of giving other members of the group a bit of what they wanted. The message stuck.

An opposing point of view in the politeness to LLM debate is the amount of energy consumed by the extra ‘polite’ words requiring processing. Sam Altman (CEO of OpenAI, which developed ChatGPT) reckons polite words cost OpenAI tens of millions of dollars a year. However, Sam thinks the extra cost is worth it. He wants OpenAI products to be regarded as humanlike so people treating LLMs like humans is a good thing. This puts me in a Catch-22 situation – do I want to do what the CEO of a megacorporation who is trying to sell a product wants? Or do I deliberately not want to do what Sam Altman wants me to do?

In conclusion, I’m not currently going to start putting friendly words at the beginning of my LLM prompts. Perhaps I should, to reinforce my own practice of being more friendly and personal. Or perhaps I shouldn’t, because there are lots of things to get done in a day and I’d rather practise my cello than write extra words and sentences for non-sentient technology. Plus I’ll save a few joules of energy by minimising my ‘pleases’ and ‘thank yous’.

What do you think?